Gamma and Linear Space - What They Are and How They Differ

Linear space lighting is a term that game developers are hearing more and more as games reach for the next level of realism with physically based rendering (PBR). Though linear space and its counterpart, gamma space, are fairly simple and important concepts, many developers never learn what these terms really mean. This document will define gamma and linear space, explain how they differ, and show how they apply to the Unity engine.

Linear Space

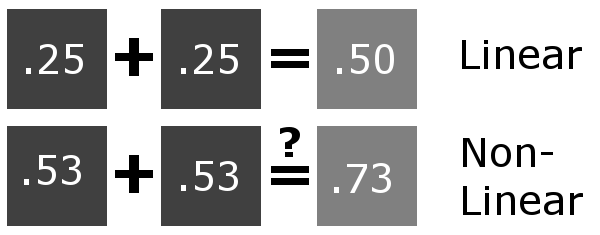

First we need to know what linear color space is. Simply put, it means that numerical intensity values are proportional to the physical light intensity they represent, not to how bright they appear to the eye. This means that colors can be added and multiplied correctly. A color space without that property is called “non-linear”. Below is an example where an intensity value is doubled in a linear and a non-linear color space. In linear space, doubling the number doubles the intensity; in the non-linear space (gamma = 0.45, more on this later) we can’t simply double the value to get the correct intensity.

Doubling the intensity of a dark grey square in a linear and non-linear space
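The doubling example can also be worked through numerically. The snippet below is a minimal sketch that assumes a pure power curve with the 0.45 exponent used in this article; the helper names `encode` and `decode` are made up for illustration.

```python
GAMMA_ENCODE = 0.45  # encoding exponent used in this article (assumed pure power curve)

def encode(linear):
    """Linear intensity (0..1) -> gamma-space value."""
    return linear ** GAMMA_ENCODE

def decode(encoded):
    """Gamma-space value (0..1) -> linear intensity."""
    return encoded ** (1.0 / GAMMA_ENCODE)

dark_grey = 0.2                   # linear intensity of the dark grey square
doubled_linear = 2 * dark_grey    # in linear space, doubling the number doubles the light

stored = encode(dark_grey)        # the same grey as a gamma-space value
correct = encode(doubled_linear)  # the gamma-space value that really means "twice as intense"
naive = decode(2 * stored)        # what we get if we double the gamma-space number instead

print(f"linear space: {dark_grey} -> {doubled_linear}")
print(f"gamma space:  {stored:.3f} -> {correct:.3f} (correct), not {2 * stored:.3f}")
print(f"doubling the gamma-space value gives linear {naive:.3f} instead of {doubled_linear}")
```

Doubling the gamma-space number overshoots badly: the result decodes to a linear intensity well above the intended 0.4.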

Gamma Space

The need for gamma arises for two main reasons. The first is that screens have a non-linear response to intensity. The other is that the human eye can tell the difference between darker shades better than lighter shades. This means that when images are stored at limited precision to save space, we want greater accuracy for dark intensities at the expense of lighter intensities. Both of these problems are resolved using gamma correction, which is to say the intensity of every pixel in an image is put through a power function. Specifically, gamma is the name given to the power applied to the image.

A graph of pow(x, gamma)

Looking at the above graph, it is apparent that any gamma other than 1 produces a non-linear space.
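To see why a power function helps with precision in dark shades, the sketch below counts how many 8-bit codes land in the darkest tenth of the linear intensity range. It assumes 256 storage levels and a pure power curve; `codes_below` is a hypothetical helper written for this illustration.

```python
def codes_below(linear_threshold, gamma, levels=256):
    """Count the integer codes whose decoded linear intensity is below the threshold.

    A stored code c is assumed to represent the linear intensity
    (c / (levels - 1)) ** (1 / gamma).
    """
    top = levels - 1
    return sum(
        1 for c in range(levels)
        if (c / top) ** (1.0 / gamma) < linear_threshold
    )

darkest_tenth = 0.1  # linear intensities from 0 to 0.1

linear_codes = codes_below(darkest_tenth, gamma=1.0)   # values stored linearly
gamma_codes = codes_below(darkest_tenth, gamma=0.45)   # values stored in gamma space

print("codes covering the darkest 10%, linear storage:", linear_codes)
print("codes covering the darkest 10%, gamma storage: ", gamma_codes)
```

With a gamma of 0.45, several times more codes are spent on the dark end of the range, which is where the eye is most sensitive.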

Two common gamma values applied to the center image

Most images are stored with a gamma of 0.45 applied to them, which has the effect demonstrated in the above left image. The darker regions of the image are now recorded using a greater range of values, while bright ranges are compressed. Images stored like this are in “gamma space”. For example, a neutral grey with a linear intensity of 0.5 isn’t stored as 0.5; it is stored as roughly 0.73, while pure whites and blacks remain the same. This is the default behavior of nearly every digital camera, as well as image editing applications and so on. In fact, nearly every image you see on your computer has that gamma applied to it.

You may be wondering why images display correctly, and don’t look too bright. This is where the non-linear response of displays comes in. CRT screens, simply by how they work, apply a gamma of around 2.2, and modern LCD screens are designed to mimic that behavior. A gamma of 2.2, which is approximately the reciprocal of 0.45, darkens the brightened images when applied to them, leaving the original image.

For example, the above left image represents what is stored on your computer, and when that is displayed, the result looks like the center image. The left image has been “gamma corrected”, which means it has a gamma value such that it will be displayed correctly after the screen’s gamma is applied to the image. If instead the computer is storing the center image, which has not been gamma corrected, the displayed result looks like the right image, clearly unwanted behavior.
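The three images can be reproduced for a single grey value. This is a rough sketch that assumes the display behaves as an exact 2.2 power curve (real displays only approximate this); the function names are illustrative.

```python
DISPLAY_GAMMA = 2.2  # assumed display response, a pure power curve

def gamma_correct(linear):
    """Prepare a linear intensity for storage (gamma of 1/2.2, about 0.45)."""
    return linear ** (1.0 / DISPLAY_GAMMA)

def display(stored):
    """What the screen emits for a stored value."""
    return stored ** DISPLAY_GAMMA

mid_grey = 0.5                            # intended linear intensity (center image)

stored = gamma_correct(mid_grey)          # left image: brightened value kept on disk
shown_corrected = display(stored)         # the screen's gamma cancels the correction
shown_uncorrected = display(mid_grey)     # right image: stored without correction

print(f"stored value:        {stored:.3f}")
print(f"shown (corrected):   {shown_corrected:.3f}")
print(f"shown (uncorrected): {shown_uncorrected:.3f}")
```

The corrected value comes back out of the display at the intended 0.5, while the uncorrected one is shown far too dark.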

Color Spaces and the Rendering Pipeline

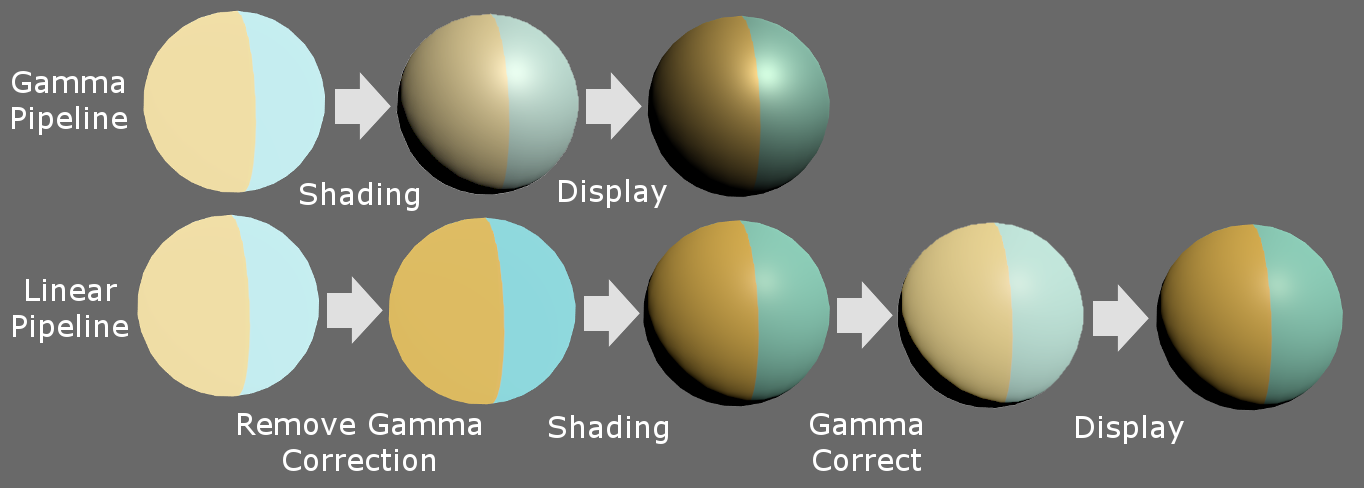

When using the gamma pipeline in rendering, gamma-corrected textures are passed into shaders as they are. Next, the lighting is calculated. Afterwards, the final image is output to the display and adjusted by the display’s gamma value. This behavior, while simple, is not physically correct. In real life, light behaves linearly, which means that adding together the contributions from multiple light sources gives the correct intensity. Shading math therefore assumes linear values, but in the gamma pipeline the input colors and textures remain in gamma space. This means the result of the shading is not truly accurate, but after the display’s correction it is often good enough. However, with increasing demand for immersive, photo-real rendering, this is no longer a suitable method.

Therefore, typical practice in PBR is to use a linear pipeline. Here, the input colors and textures have their gamma correction removed before shading, putting them into linear space. When shaded, the result is physically correct because the shading process and inputs are all in the same space. Afterwards, any post effects should be computed while the frame is still in linear space, as post effects are typically linear, much like shading. Finally, the image is gamma corrected so that it will have the proper intensity after the display’s gamma adjustment.

A comparison of Gamma and Linear pipelines

Above you can see the difference between the end result of the gamma and linear pipelines on a simple sphere. Notice the brighter specular highlight and stronger falloff of the light in gamma space. These are examples of unrealistic behavior, and will make achieving photo-realism difficult.
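The difference in light falloff can be approximated with a toy calculation. The sketch below is not how any real renderer is structured; it assumes a single grey texel, a scalar light intensity, simple multiplication as the "shading", and a display with an exact 2.2 power response.

```python
DISPLAY_GAMMA = 2.2  # assumed display response

def to_linear(stored):
    return stored ** DISPLAY_GAMMA

def to_gamma(linear):
    return linear ** (1.0 / DISPLAY_GAMMA)

def display(framebuffer):
    return framebuffer ** DISPLAY_GAMMA

def gamma_pipeline(texture, light):
    """Shade the gamma-space texture value directly, then let the display darken it."""
    shaded = texture * light
    return display(shaded)

def linear_pipeline(texture, light):
    """Remove gamma, shade in linear space, gamma correct, then display."""
    shaded = to_linear(texture) * light
    return display(to_gamma(shaded))

texture = to_gamma(0.5)  # a grey with linear intensity 0.5, stored gamma corrected

for light in (1.0, 0.75, 0.5, 0.25):
    g = gamma_pipeline(texture, light)
    l = linear_pipeline(texture, light)
    print(f"light {light:.2f}: gamma pipeline {g:.3f}, linear pipeline {l:.3f}")
```

At full light the two pipelines agree, but as the light dims the gamma pipeline darkens faster than it should, which matches the stronger falloff visible on the sphere.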

Color Spaces in Unity

Fortunately, Unity is able to switch between color spaces very easily, and for many projects will seamlessly work with your rendering pipeline. By default, Unity uses gamma space as only PC, Xbox, and PlayStation platforms support linear rendering. For these platforms, to change between linear and gamma space, go to:

Edit -> Project Settings -> Player -> Other Settings

Here, there will be an option called Color Space, where you can choose either Linear or Gamma. It’s as easy as that! Shaders should now receive non-gamma corrected textures. Do note that if your project is already in development, you’ll likely need to rework the lighting and various textures to produce a good result, since the rendered scene will not look the same as before. If you had any lightmaps baked, they will need to be re-baked to be correct.

When linear color space and HDR are used, all post effects will be done by Unity in a full linear space. However, when only linear color space is enabled, Unity will use a gamma framebuffer, but fortunately when reading and writing to this buffer, Unity will automatically convert the values’ color space properly so that the image effects are still done in linear space.

Although Unity does not support the default linear pipeline on some platforms, such as mobile, it is possible to implement it yourself within shaders. This is done by applying the pow() function with a gamma of 2.2 to gamma-corrected input textures to transform them to linear space, and applying pow() again with a gamma of 0.45 before returning the result to put it back in gamma space. Note that this method is computationally expensive, so be aware of the capabilities of your target devices and use it only where needed.
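The per-pixel work described above can be sketched as follows. This is Python standing in for shader code, not Unity shader syntax; the `fragment` function, its parameters, and the simple multiply used as the lighting model are all assumptions made for illustration.

```python
GAMMA = 2.2  # assumed gamma of the input textures and the display

def to_linear(channel):
    """Equivalent of pow(channel, 2.2) in a shader."""
    return channel ** GAMMA

def to_gamma(channel):
    """Equivalent of pow(channel, 1 / 2.2), roughly pow(channel, 0.45)."""
    return channel ** (1.0 / GAMMA)

def clamp01(value):
    return max(0.0, min(1.0, value))

def fragment(tex_rgb, light_rgb):
    """Stand-in for a fragment shader doing its own color space conversion.

    tex_rgb:   gamma-corrected texture sample, one value per channel
    light_rgb: linear light color and intensity, one value per channel
    """
    linear_tex = [to_linear(c) for c in tex_rgb]               # 1. remove gamma from the input
    shaded = [t * l for t, l in zip(linear_tex, light_rgb)]    # 2. shade in linear space
    return tuple(to_gamma(clamp01(c)) for c in shaded)         # 3. re-apply gamma for output

result = fragment((0.73, 0.73, 0.73), (0.5, 0.5, 0.5))
print(result)
```

Each pixel pays for one power function per channel on the way in and another on the way out, which is where the extra cost on low-end devices comes from.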

Conclusion

Hopefully, you have gained a better understanding of gamma and linear space and now know where to begin applying these principles to your projects. If you are making a lot of custom changes to Unity’s rendering pipeline, shaders, and post effects, you’ll need a firm grasp of the fundamentals to avoid common mistakes in color management that can drag your image quality down. When implemented correctly, however, a linear rendering pipeline is a key step on the road to delivering immersive, realistic worlds.

To learn more about our team at KinematicSoup and how we are working to help Unity developers build better games faster, check out our real-time multi-user Unity scene collaboration tool, Scene Fusion.

Further Reading

http://docs.unity3d.com/Manual/LinearLighting.html

http://http.developer.nvidia.com/GPUGems3/gpugems3_ch24.html

http://www.slideshare.net/ozlael/hable-john-uncharted2-hdr-lighting

This blog was written by Scott Sewell, a developer for KinematicSoup.